Serverless computing (or, simply, serverless) is a way to allocate backend services in a dynamic, as-used fashion. The Cloud Native Computing Foundation (CNCF) formally describes it as ‘the concept of building and running applications that do not require server management’. There are two approaches to maximising its benefits: host it yourself or use a cloud provider.

You might use serverless to receive inbound webhooks from external systems such as Slack custom commands or SaaS-based monitoring platforms, both of which may be triggered only intermittently. Overall, organisations seem to be flocking to serverless not only because of the pay-for-what-you-use bonus, but also because serverless architectures are scalable and efficient and perform well. The more efficient your code, the faster it runs and the less you pay. Boom.
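To make the webhook case concrete, here is a minimal sketch of a function that answers a Slack custom command. It assumes an AWS Lambda-style `handler(event, context)` entry point sitting behind an HTTP gateway that passes the raw request body in `event["body"]`. The `/status` command name and the reply text are made up for illustration, and a real deployment would also verify Slack's request signature, which this sketch skips.

```python
import base64
import json
from urllib.parse import parse_qs


def handler(event, context):
    """Runs once per inbound webhook; nothing runs (or bills) in between."""
    body = event.get("body") or ""
    if event.get("isBase64Encoded"):
        body = base64.b64decode(body).decode("utf-8")

    # Slack posts custom (slash) commands as URL-encoded form fields.
    fields = {key: values[0] for key, values in parse_qs(body).items()}
    command = fields.get("command", "")
    text = fields.get("text", "")

    if command != "/status":  # hypothetical command name
        return {"statusCode": 400, "body": "unsupported command"}

    reply = {
        "response_type": "ephemeral",
        "text": f"Status requested for: {text or 'all services'}",
    }
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(reply),
    }
```

Because the function holds no state between calls, the platform can start it only when Slack actually sends something, which is exactly the intermittent pattern described above.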

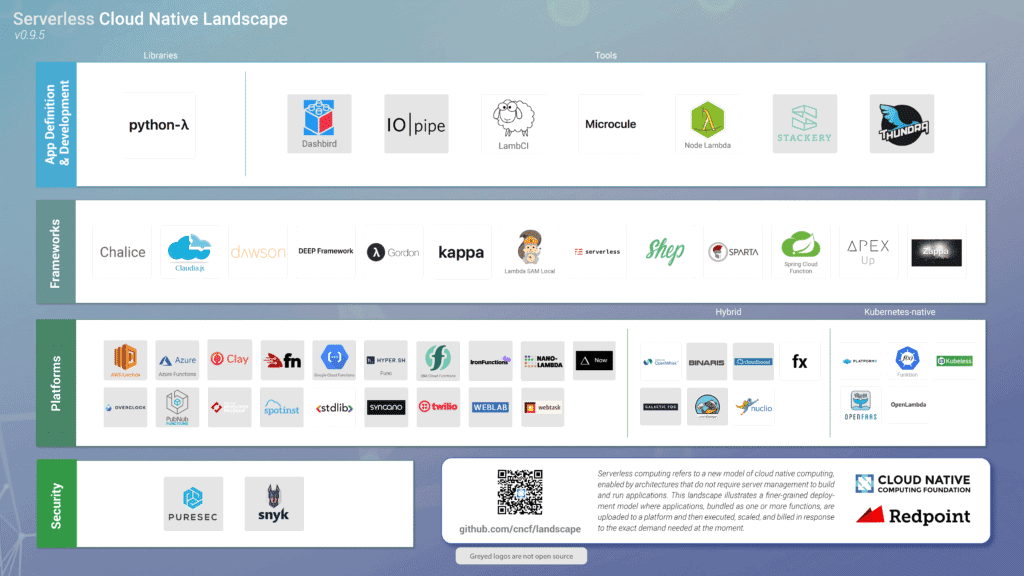

There’s also a flourishing and growing community around serverless which is heavily supported by the CNCF, as you can see in the image above, so there's something to it! In this blog, we’ll cover what you need to know to make your mind up and potentially start utilising serverless.

Backend as a Service (BaaS) is, well, a serverless backend. Fairly self-explanatory. While there are some overlapping elements between BaaS and serverless computing, they’re not quite the same.

It’s true that in both serverless and BaaS, developers only need to write application code and don’t have to worry at all about what’s going on in the backend. And BaaS provides some extra serverless computing services, but it is not true serverless.

If BaaS isn’t truly serverless, what about Function as a Service (FaaS)?

FaaS is a bit newer to the scene, a concept that only became available in 2014 (thanks to hook.io), yet it’s often considered synonymous with serverless. Like BaaS, though, FaaS is just one way to achieve serverless computing, which it does by executing modular pieces of code on the edge.

FaaS allows developers to quickly write code on the go, so you can be ultra-responsive without having to build or maintain a complex infrastructure. This easy way to scale code is a cost-efficient way to start implementing microservices.

We’ve established the basis of what serverless is, and certainly what it isn’t. When you’re looking at implementing a true serverless architecture, these are the core principles you should be looking at:

Hostless is serverless and serverless is hostless. There’s no centralised architecture, removing the costs of catering to a server fleet.

Elasticity is a symptom, a positive one at that, of being hostless. With your serverless services, this means you’ll be able to scale up and down to meet demand, mostly automatically.
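As a rough illustration of what that elasticity means in practice, the sketch below computes how many function instances a platform might run for a given load. It is a toy model, not any provider's actual algorithm: `desired_instances`, `target_per_instance` and `max_instances` are hypothetical names, and real autoscalers also smooth the signal over time.

```python
import math


def desired_instances(in_flight_requests, target_per_instance, max_instances=100):
    """Toy scaling rule: enough instances to keep each one at or below its target load."""
    if target_per_instance <= 0:
        raise ValueError("target_per_instance must be positive")
    if in_flight_requests <= 0:
        return 0  # no traffic: scale all the way down to zero
    needed = math.ceil(in_flight_requests / target_per_instance)
    return min(max_instances, needed)  # cap so a spike cannot scale without limit
```

With a target of 10 requests per instance, 25 in-flight requests call for 3 instances, and an idle service drops back to none.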

Serverless is distributed by design, with smaller functions.

In principle, a serverless environment lets developers focus on writing event-driven functions rather than on the infrastructure that runs them.
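If "event-driven functions" sounds abstract, the following sketch shows the idea in miniature: small functions register for an event type, and a dispatcher calls whichever ones match. Everything here (`on`, `dispatch`, the `file.uploaded` event and its fields) is invented for illustration; on a real platform the provider plays the dispatcher role.

```python
from collections import defaultdict

# Maps an event type to the functions interested in it.
_handlers = defaultdict(list)


def on(event_type):
    """Decorator that registers a function for one event type."""
    def register(func):
        _handlers[event_type].append(func)
        return func
    return register


@on("file.uploaded")  # hypothetical event type
def make_thumbnail(event):
    return f"thumbnail for {event['name']}"


@on("file.uploaded")
def index_file(event):
    return f"indexed {event['name']}"


def dispatch(event):
    """Stand-in for the platform: run every function registered for this event."""
    return [func(event) for func in _handlers.get(event["type"], [])]
```

Each function knows only about its own event, which is what keeps the pieces small and independently deployable.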

Because serverless systems don’t require server management and maintenance, they can be cost-efficient.

One of the biggest upsides of serverless, especially exciting for developers, is that devs can write code in whatever way they choose.

As we’ve already established, a serverless architecture means that you don’t have to worry about any infrastructure. Once you write and deploy your code, your service scales up and down automatically based on demand. Amazing.

Like with scaling, what goes up must come down.

If you’re using a hosted cloud provider, it becomes harder to disconnect as you become more and more invested. That freedom, flexibility and scalability that is associated with serverless is then slightly obscured by the fact that the cloud provider hosts, provisions and manages all of the resources for running your apps.

Because it’s still in its infancy, many organisations see serverless as an ever-evolving ‘work in progress’. With this approach, it might take a while to see positive results from the serverless switch. Confusion might also be attributed to the lack of formalised training or learning to help teams cope with the ins and outs.

As mentioned above, one of the biggest plus-sides is that it takes very little time or effort to get started. There are a handful of service providers and platforms (both hosted and installable) that can be used to deploy a serverless architecture, which we’ll breeze through below.

If you’re using cloud-provider-based services, Amazon Web Services (AWS), Microsoft Azure (Azure) and Google Cloud (GCP) will execute your piece of code, allocate resources for it dynamically and charge only for the resources used to run that code.
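To see how that pay-per-use billing adds up, here is a back-of-the-envelope sketch. It assumes a common pricing shape (a charge per GB-second of compute plus a charge per million requests); the function name is hypothetical and the prices in the usage note are placeholders, not any provider's published rates.

```python
def monthly_cost(invocations, avg_duration_ms, memory_mb,
                 price_per_gb_second, price_per_million_requests):
    """Estimate a monthly bill from usage alone; idle time costs nothing."""
    # Compute is billed on memory allocated multiplied by time actually spent running.
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_charge = gb_seconds * price_per_gb_second
    request_charge = invocations / 1_000_000 * price_per_million_requests
    return compute_charge + request_charge
```

With placeholder prices of 0.00002 per GB-second and 0.20 per million requests, one million invocations at 200 ms and 512 MB come to about 2.20. Halve the average duration to 100 ms and the estimate falls to about 1.20, which is the "faster code, smaller bill" effect in numbers.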

The public cloud providers offer a wide range of services and tools to relieve or obscure infrastructure management tasks and allow users to make the most of serverless.

You’re not just limited, however, to the services provided by cloud providers themselves. There’s also a host (pun intended) of installable serverless platforms to integrate with, which give you more flexibility and control than their cloud-provided counterparts.

Read also: Serverless frameworks on Kubernetes

When you adopt a serverless architecture, you’re gaining a whole new world of efficiency, but perhaps at the cost of visibility and even control.

If you have a strong case for serverless architecture, then the question is whether you are happy with a hosted or installable serverless platform. A hosted platform provides essential guidance to deploying well-defined serverless architectures and built-in capabilities such as logging and monitoring.

It also reduces operational overhead, but at the cost of being locked in to the cloud provider. An installable platform, conversely, provides the flexibility to choose non-cloud-provider services and/or open-source applications to integrate with as part of your serverless architecture. However, it requires more technical expertise and can end up costing more because of the engineering effort required.

It’s really up to the needs and resources of your individual business or teams. In any case, Appvia Kore Operate can help you get started more quickly. If you opt for a hosted serverless platform, you can utilise Kore Operate in conjunction with cloud services like AWS API Gateway and AWS Lambda. If you choose to install your own serverless platform, such as Kubeless or Knative, Kore secures your Kubernetes cluster in the cloud provider of your choice.