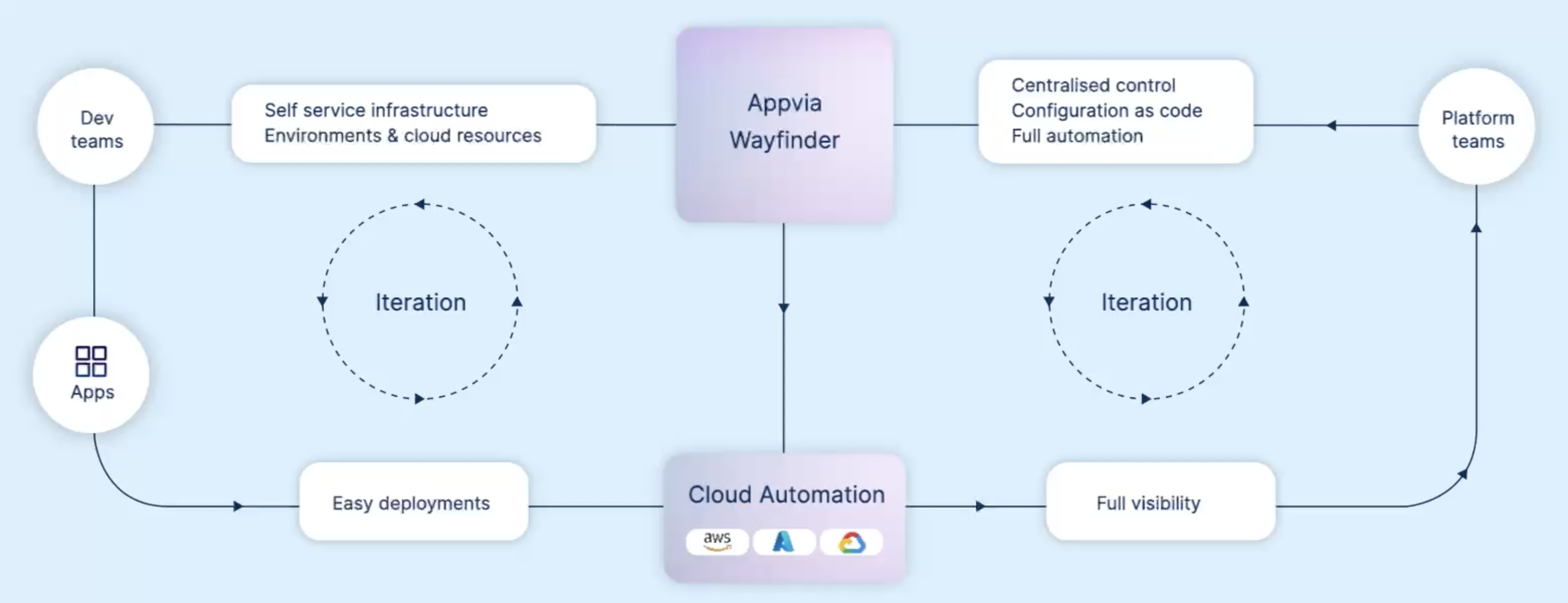

End-to-end self service for developers, scalable for platform teams, and secure by design.

Give your developers the right level of freedom with integrated policy. Keep costs low and your security posture high.

Integrate Wayfinder into existing tools easily. Use self-service choices and system information to create dynamic configuration of services.

Use ephemeral environments to keep costs low and move quickly through to production.

Get self-service Kubernetes clusters with all your policies and custom integrations already in place.

Director, Enterprise Technology

Appvia have shown a drive to not just innovate within the service they provide but provide guidance and assistance to other parts of the organization to promote both product and agile delivery.

Head of Platform Engineering

Appvia have helped us realise our cloud-first strategy with the excellent product solutions they have implemented at the Bank of England. The security, governance and peace of mind brought in by the cloud landing zone, and paired with the DevOps culture that they’ve brought in the Bank, we’ve dramatically accelerated our journey to cloud-native adoption.

Lead Machine Learning Engineer

Wayfinder is straightforward to use, with an intuitive GUI that allows us to easily have a holistic view of the state of the clusters.